Microsoft Is One Step Closer to Local LLMs With Its Newest Phi-Silica SLM That Runs Directly on Copilot+ Laptop NPUs

We’ve all wondered when we’re going to get local AI on our devices. While ChatGPT, Gemini, and Copilot are nice to have, being able to run AI models locally on your PC, without pinging a server or risking censorship, is great. Microsoft is bringing this idea much closer with its new Phi-Silica model, which it calls a Small Language Model, or SLM for short.

It’s built into every new Copilot+ laptop, where it will finally put to use the NPUs (Neural Processing Units) that semiconductor companies have been including in their CPUs and SoCs.

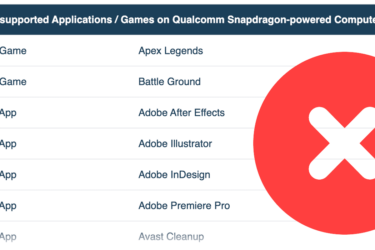

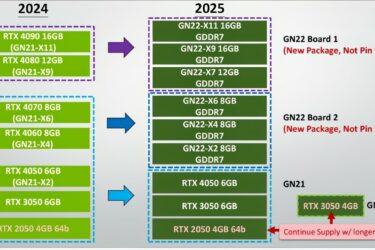

So far, the only announced Copilot+ laptops are Snapdragon-powered, but Intel and AMD are sure to join as soon as they can, so it’s easy to imagine nearly every PC and laptop from 2025 onward shipping with a local language model onboard.

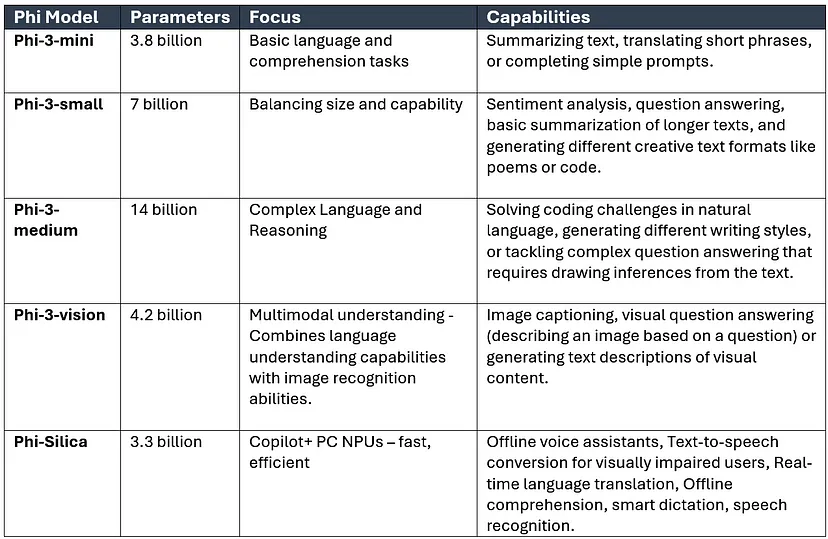

Phi-Silica is the smallest model in Microsoft’s Phi family of language models, with 3.3 billion parameters. Parameters are the numerical weights a model learns during training, and they determine how it turns your prompt into the output you see. In general, more parameters give a model more capacity to capture patterns from its training data, at the cost of more memory and compute, which is why a smaller model is a better fit for a laptop.
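Every one of those parameters has to be stored in memory, which is why parameter count matters so much for a model meant to live on a laptop. Here’s a rough back-of-the-envelope sketch of how much space 3.3 billion weights would take. The storage precisions below are generic examples we picked for illustration; this article doesn’t say which precision Phi-Silica actually uses.

```python
# Rough weight-storage estimate for a 3.3-billion-parameter model.
# ASSUMPTION: the precisions below are generic illustrations, not Phi-Silica's
# confirmed format. The estimate covers weights only (no KV cache or activations).

PARAMS = 3.3e9  # parameter count stated for Phi-Silica

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_gb(params: float, bytes_per_param: float) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision}: ~{weight_gb(PARAMS, nbytes):.2f} GB of weights")
```

Under these assumptions, the same model ranges from about 13 GB of weights at full 32-bit precision down to under 2 GB at 4-bit, which shows why small, compressed models are the ones that can realistically run on laptop hardware.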

Microsoft says Phi-Silica’s first-token latency is 650 tokens per second, which is a strange way of expressing latency. Doing the math ourselves, that works out to roughly 1.5 ms per prompt token, which is rather fast, so responses should start appearing quickly. The SLM consumes about 1.5W of power while doing this, so it shouldn’t hog resources or slow down your other work. Token generation then reuses the KV cache from the NPU and runs on the CPU, producing about 27 tokens per second.
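To make those figures more concrete, here’s a small sketch that converts Microsoft’s throughput numbers into per-token times and estimates how long a single request might take. It assumes both rates stay constant and that the two phases run back to back with no other overhead; the 500-token prompt and 200-token reply are hypothetical values chosen for illustration.

```python
# Rough timing estimates from the figures Microsoft quoted for Phi-Silica.
# ASSUMPTIONS (ours): rates are constant, prompt processing and generation run
# back to back, and there is no other overhead. Request sizes are hypothetical.

PROMPT_TOKENS_PER_SEC = 650.0  # quoted first-token (prompt-processing) rate
GEN_TOKENS_PER_SEC = 27.0      # quoted output-token generation rate


def ms_per_token(tokens_per_sec: float) -> float:
    """Convert a throughput in tokens/s into milliseconds per token."""
    return 1000.0 / tokens_per_sec


def estimate_response_seconds(prompt_tokens: int, output_tokens: int) -> tuple[float, float]:
    """Return (approx. time to first token, total time) in seconds.

    Time to first token is approximated as the prompt-processing time alone.
    """
    ttft = prompt_tokens / PROMPT_TOKENS_PER_SEC
    total = ttft + output_tokens / GEN_TOKENS_PER_SEC
    return ttft, total


if __name__ == "__main__":
    print(f"Prompt processing: {ms_per_token(PROMPT_TOKENS_PER_SEC):.2f} ms/token")
    print(f"Generation:        {ms_per_token(GEN_TOKENS_PER_SEC):.1f} ms/token")
    ttft, total = estimate_response_seconds(prompt_tokens=500, output_tokens=200)
    print(f"500-token prompt, 200-token reply: first token after ~{ttft:.2f} s, done after ~{total:.1f} s")
```

With these assumptions, a 500-token prompt would start answering in under a second, while a 200-token reply would take around 8 seconds to finish, so for longer answers the 27 tokens per second generation speed matters more than the prompt-processing rate.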

So far, it looks like Phi-Silica might be exclusive to Copilot+ PCs; however, if Microsoft decides to open-source it, that could open the door to AI-powered wearables and many other devices.

If you’re a developer, check out how Microsoft is adding Phi-Silica to the Windows SDK, so you can create AI-powered Windows apps that run locally.